Storage is an area where you can never know too much. For many infrastructures storage is the most likely cause of performance issues and a source of complexity and misconfiguration – especially given that many VI admins come from a server background (not storage) due to VMware’s server consolidation roots.

Knowledge

- Identify RAID levels

- Identify supported HBA types

- Identify virtual disk format types

Skills and Abilities

- Determine use cases for and configure VMware DirectPath I/O

- Determine requirements for and configure NPIV

- Determine appropriate RAID level for various Virtual Machine workloads

- Apply VMware storage best practices

- Understand use cases for Raw Device Mapping

- Configure vCenter Server storage filters

- Understand and apply VMFS resignaturing

- Understand and apply LUN masking using PSA-related commands

- Analyze I/O workloads to determine storage performance requirements

Tools & learning resources

- Fibre Channel SAN Configuration Guide

- iSCSI SAN Configuration Guide

- ESX Configuration Guide

- ESXi Configuration Guide

- vSphere Command-Line Interface Installation and Scripting Guide

- I/O Compatibility Guide

- vscsiStats, vicfg-*, vifs, vmkfstools, esxtop/resxtop

Identify RAID levels

Common RAID types: 0, 1, 5, 6, 10. Wikipedia has a good summary of the basic RAID types if you’re not familiar with them. Scott Lowe has a good article about RAID in storage arrays, as does Josh Townsend over at VMtoday.

The impact of RAID types will vary depending on your storage vendor and how they implement RAID. Netapp (which I’m most familiar with) uses a proprietary RAID-DP, which is like RAID-6 but without the performance penalty (so Netapp say).
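When comparing RAID levels for a given workload, a common rule of thumb is the back-end write penalty. Each front-end write costs roughly 2 disk I/Os on RAID 1/10, 4 on RAID 5 and 6 on RAID 6. The Python sketch below applies those textbook penalties. Treat them as assumptions rather than vendor figures, because array cache and implementations such as RAID-DP change the picture. The 180 IOPS per disk in the example is purely illustrative.

```python
import math

# Textbook back-end I/Os per front-end write (assumption: no cache effects,
# no vendor-specific RAID such as RAID-DP).
WRITE_PENALTY = {"RAID0": 1, "RAID1": 2, "RAID10": 2, "RAID5": 4, "RAID6": 6}


def backend_iops(frontend_iops, read_pct, raid):
    """Disk IOPS needed to serve a front-end workload; read_pct is 0-100."""
    if not 0 <= read_pct <= 100:
        raise ValueError("read_pct must be between 0 and 100")
    penalty = WRITE_PENALTY[raid]
    reads = frontend_iops * read_pct
    writes = frontend_iops * (100 - read_pct) * penalty
    return (reads + writes) / 100


def spindles_needed(frontend_iops, read_pct, raid, iops_per_disk):
    """Minimum disk count for performance alone (ignores capacity)."""
    return math.ceil(backend_iops(frontend_iops, read_pct, raid) / iops_per_disk)


if __name__ == "__main__":
    for level in ("RAID10", "RAID5", "RAID6"):
        print(level, backend_iops(1000, 70, level), spindles_needed(1000, 70, level, 180))
```

With 1,000 front-end IOPS at 70% reads, RAID 10 needs about 1,300 back-end IOPS while RAID 6 needs about 2,500. That is why write-heavy workloads tend to end up on RAID 10.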


Supported HBA types

This is a slightly odd exam topic – presumably we won’t be buying HBAs as part of the exam so what’s there to know? The best (only!) place to look for real world info is VMware’s HCL (which is now an online, searchable repository). Essentially it comes down to Fibre Channel or iSCSI HBAs.

Remember you can have a maximum of 8 HBAs or 16 HBA ports per ESX/ESXi server. You should not mix HBAs from different vendors in a single server; it can work but isn’t officially supported.
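These limits are easy to check mechanically. Below is a minimal Python sketch of a pre-flight check. It assumes you’ve already gathered an inventory of each host’s HBAs; the `Hba` record and the vendor names in the example are hypothetical.

```python
from dataclasses import dataclass

MAX_HBAS = 8        # per ESX/ESXi host, as noted above
MAX_HBA_PORTS = 16  # per ESX/ESXi host, as noted above


@dataclass
class Hba:
    vendor: str  # hypothetical inventory field
    ports: int


def check_hbas(hbas):
    """Return a list of problems with one host's HBA inventory (empty means OK)."""
    problems = []
    if len(hbas) > MAX_HBAS:
        problems.append(f"{len(hbas)} HBAs exceeds the maximum of {MAX_HBAS}")
    ports = sum(h.ports for h in hbas)
    if ports > MAX_HBA_PORTS:
        problems.append(f"{ports} HBA ports exceeds the maximum of {MAX_HBA_PORTS}")
    vendors = sorted({h.vendor for h in hbas})
    if len(vendors) > 1:
        # Mixing vendors can work but isn't officially supported.
        problems.append("mixed HBA vendors: " + ", ".join(vendors))
    return problems


if __name__ == "__main__":
    print(check_hbas([Hba("QLogic", 2), Hba("Emulex", 2)]))
```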

Identify virtual disk format types

Virtual disk (VMDK) format types:

- Eagerzeroedthick

- Zeroedthick (default)

- Thick

- Thin

Three factors primarily determine the disk format:

- Initial disk size

- Blanking underlying blocks during initial creation

- Blanking underlying blocks when deleting data in the virtual disk (reclamation)

The differences stem from whether the physical files are ‘zeroed’ or not (i.e. where the ‘virtual’ disk holds no data, what is in the underlying VMDK?). Several features (such as FT and MSCS) require an ‘eagerzeroedthick’ disk. Check out this great diagram (courtesy of Steve Foskett) which shows the differences.
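The following small Python model makes the differences concrete. It describes the four formats in terms of the first two factors: up-front allocation, and when blocks get blanked. The flag values reflect my understanding of the vmkfstools `-d` disk types rather than an official specification. Reclamation (the third factor) isn’t modelled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiskFormat:
    allocated_at_creation: bool  # full size reserved on the datastore up front
    zeroed_at_creation: bool     # every block blanked when the disk is created
    zeroed_on_first_write: bool  # blocks blanked lazily, on first guest write


# Assumed semantics of the four VMDK formats (reclamation not modelled).
FORMATS = {
    "eagerzeroedthick": DiskFormat(True, True, False),
    "zeroedthick": DiskFormat(True, False, True),  # the default
    "thick": DiskFormat(True, False, False),
    "thin": DiskFormat(False, False, True),
}


def datastore_usage_gb(fmt, provisioned_gb, written_gb):
    """Space consumed on the datastore, ignoring metadata overhead."""
    if FORMATS[fmt].allocated_at_creation:
        return provisioned_gb
    return min(written_gb, provisioned_gb)


def meets_ft_mscs_requirement(fmt):
    """FT and MSCS need every block allocated and zeroed up front."""
    f = FORMATS[fmt]
    return f.allocated_at_creation and f.zeroed_at_creation


def may_expose_stale_data(fmt):
    """True if old data on the physical disk is never blanked before use."""
    f = FORMATS[fmt]
    return not (f.zeroed_at_creation or f.zeroed_on_first_write)
```

For a 40GB disk holding 10GB of data, the thin disk consumes 10GB on the datastore while every thick variant consumes the full 40GB.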

The other possible type is an RDM, which itself comes in two types:

- RDM (virtual) – enables snapshots and vMotion but masks some physical device characteristics

- RDM (physical) – required for MSCS clustering and some SAN applications

DirectPath I/O

Lets a VM bypass the virtualisation layer and speak directly to a PCI device. Benefits are reduced CPU usage on the host and potentially slightly higher I/O to a VM when presenting a 10Gb NIC. Alternatively, you could present a physical USB device directly to a VM (see this example at Petri.nl, link courtesy of Sean Crookston’s study notes).

Requirements

- Intel Nehalem only (experimental support for AMD)

- Very limited device support (10Gb Ethernet cards, and only a few of those). As usual, the list of devices which work will be much larger than the officially certified HCL (the quad port card for my HP BL460G6 worked fine, as did USB devices).

- Once a device is used for passthrough it’s NOT available to the host and therefore other VMs

Configuring the host (step 1 of 2)

- Configure PCI device at host level (Configuration -> Advanced Settings under Hardware). Click ‘Configure Passthrough’ and select a device from the list.

NOTE: If the host doesn’t support DirectPath, a message to that effect will be shown in the display window.

- Reboot the host for the changes to take effect.

Configuring the VM (step 2 of 2)

1. Edit the VM settings and add a PCI Device.

NOTE: The VM must be powered off.

2. Select the passthrough device from the list (which only shows enabled devices). A warning states that enabling this device will limit features such as snapshots and vMotion.

If you want in-depth information about VMDirectPath, read this VMware whitepaper. According to the ESXi Configuration Guide (see p62), adding a VMDirectPath device to a VM sets its memory reservation to the size of allocated memory, but I forgot to confirm this in my lab.
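The order of the two steps matters. You enable passthrough on the host first and reboot, then add the device to the VM while it’s powered off. Below is a hypothetical Python pre-check that encodes those constraints. The `Host`/`Vm` records and the PCI device ID are made up for illustration. The memory reservation behaviour comes from the ESXi Configuration Guide claim above and is not something I’ve verified.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    supports_directpath: bool
    passthrough_devices: set = field(default_factory=set)  # enabled via 'Configure Passthrough'
    pending_reboot: bool = False  # passthrough changes only apply after a reboot
    assigned: dict = field(default_factory=dict)  # device -> VM name


@dataclass
class Vm:
    name: str
    powered_on: bool
    memory_mb: int
    reservation_mb: int = 0
    pci_devices: list = field(default_factory=list)


def add_passthrough_device(host, vm, device):
    """Attach a passthrough PCI device to a VM; returns warnings, raises on blockers."""
    if not host.supports_directpath:
        raise ValueError("host does not support DirectPath I/O")
    if host.pending_reboot:
        raise ValueError("reboot the host before using passthrough devices")
    if device not in host.passthrough_devices:
        raise ValueError(f"{device} is not enabled for passthrough on this host")
    if device in host.assigned:
        # A passthrough device isn't available to the host or to other VMs.
        raise ValueError(f"{device} is already in use by {host.assigned[device]}")
    if vm.powered_on:
        raise ValueError("power off the VM before adding a PCI device")
    vm.pci_devices.append(device)
    host.assigned[device] = vm.name
    # Per the ESXi Configuration Guide (unconfirmed): reservation = allocated memory.
    vm.reservation_mb = vm.memory_mb
    return ["snapshots and vMotion are limited while a passthrough device is attached"]
```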

NPIV

Stands for N-Port ID Virtualisation. This allows a single HBA adaptor port (provided it supports NPIV) to register multiple WWPNs with the SAN fabric, rather than the single address normally registered. You can then present one of these WWPNs directly to a VM, allowing you to zone storage to a specific VM rather than to a host (which is normally the only option). Read more in Scott Lowe’s blogpost, Jason Boche’s (in depth) blogpost, Simon Long’s post, and Nick Triantos’ summary. They left me wondering what the real world benefit is to VI admins!

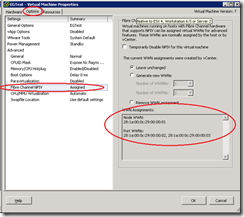

To use NPIV:

- In the VM properties, go to Options -> NPIV.

NOTE: These options will only be enabled if the VM has an RDM attached. Even if they are enabled, this does not guarantee that the HBA/switches support NPIV.

- For a new VM, click ‘Generate new WWNs’.

- For an existing VM (which is already NPIV enabled), click one of:

- ‘Generate WWNs’ to change the WWN assigned to this VM

- Temporarily Disable WWN

- Remove WWN

You’ll also have to add the newly generated WWPNs to your SAN zones and storage array masking (Initiator groups in the case of Netapp).


NPIV Requirements

- HBAs and SAN switches must support NPIV.

- NPIV only works with RDM disks

- svMotion on an NPIV enabled VM is not allowed (although vMotion is)
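The NPIV requirements and the migration restriction can be written down as a quick checklist. The Python sketch below is hypothetical: the `NpivVm` fields are my own naming and the sample WWPN is made up. `format_wwpn` normalises a 64-bit WWPN into the colon-separated form you’d typically paste into a zone or initiator group.

```python
from dataclasses import dataclass


@dataclass
class NpivVm:
    has_rdm: bool
    hba_supports_npiv: bool
    switch_supports_npiv: bool
    npiv_enabled: bool = False


def npiv_blockers(vm):
    """Reasons NPIV can't be used for this VM (an empty list means OK)."""
    blockers = []
    if not vm.has_rdm:
        blockers.append("NPIV only works with RDM disks")
    if not vm.hba_supports_npiv:
        blockers.append("HBA does not support NPIV")
    if not vm.switch_supports_npiv:
        blockers.append("SAN switch does not support NPIV")
    return blockers


def migration_allowed(vm, kind):
    """vMotion is allowed for NPIV-enabled VMs; svMotion is not."""
    if kind not in ("vmotion", "svmotion"):
        raise ValueError(f"unknown migration type: {kind}")
    return not (vm.npiv_enabled and kind == "svmotion")


def format_wwpn(raw):
    """Normalise a WWPN (16 hex digits) to lower-case colon-separated form."""
    digits = raw.replace(":", "").replace("-", "").replace(" ", "").lower()
    if len(digits) != 16 or any(c not in "0123456789abcdef" for c in digits):
        raise ValueError(f"a WWPN is 64 bits (16 hex digits), got {raw!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 16, 2))
```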

RDM

Joep Piscaer has written up a good summary of RDMs, and from that article: “RDM’s gives you some of the advantages of direct access to a physical device while keeping some advantages of a virtual disk in VMFS. As a result, they merge VMFS manageability with raw device access”.

Use cases include:

- Various types of clustering including MSCS (see section 4.2) and Oracle OCFS/ASM

- NPIV

- Anytime you want to use underlying storage array features (such as snapshots). Some SAN management software needs direct access to the underlying storage such as Netapp’s SnapManager suite for Exchange and SQL.

Two possible modes:

- Virtual compatibility. Virtualises some SCSI commands, supports snapshots

- Physical compatibility. Passes through almost all SCSI commands, no snapshots.

RDMs can be created:

- like any other VMDK through the VI client, then select RDM and choose mode

- using vmkfstools -z or vmkfstools -r (see section 1.2 for details)

- requires block storage (FC or iSCSI)

NOTE: When cloning a VM with RDMs (in virtual compatibility mode), they will be converted to VMDKs. Cloning a VM with an RDM in physical compatibility mode is not supported.
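For the command-line route, the only difference between the two modes is the vmkfstools flag: `-r` for virtual compatibility and `-z` for physical compatibility. Below is a small Python helper that builds (but doesn’t run) the command. The datastore and VM paths in the example are hypothetical, and the naa ID is illustrative.

```python
import shlex

# vmkfstools flags: -r = virtual compatibility RDM, -z = physical (passthrough) RDM
RDM_FLAGS = {"virtual": "-r", "physical": "-z"}


def rdm_command(mode, device_path, mapping_vmdk):
    """Build, but do not run, the vmkfstools command that creates an RDM mapping file."""
    if mode not in RDM_FLAGS:
        raise ValueError(f"mode must be 'virtual' or 'physical', not {mode!r}")
    return shlex.join(["vmkfstools", RDM_FLAGS[mode], device_path, mapping_vmdk])


def clone_outcome(mode):
    """What happens to the RDM when its VM is cloned."""
    return {"virtual": "converted to a VMDK", "physical": "not supported"}[mode]


if __name__ == "__main__":
    print(rdm_command(
        "physical",
        "/vmfs/devices/disks/naa.600c0ff000d5c3835a72904c01000000",  # illustrative device
        "/vmfs/volumes/datastore1/sql01/sql01_rdm.vmdk",  # hypothetical path
    ))
```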

Storage Filters

Storage filters are used to adjust default vCenter behaviour when scanning storage. See this post about storage filters at Duncan Epping’s site.

There are four filters (all of which are enabled by default):

- Host rescan (config.vpxd.filter.hostrescanFilter)

- RDM filter (config.vpxd.filter.rdmFilter)

- VMFS filter (config.vpxd.filter.vmfsFilter)

- Same hosts and transport (config.vpxd.filter.SameHostAndTransportsFilter)

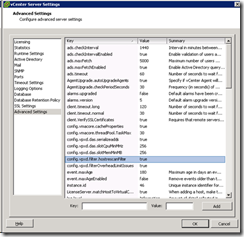

Configuring storage filters is done in vCenter (not per host):

- Go to Administration -> vCenter Settings -> Advanced Settings

- Add a key for the filter you want to change and set its value to FALSE (to disable it) or TRUE (to enable it).

NOTE: All filters are enabled by default (value is TRUE) even if not specifically listed.

Turning off the ‘Host Rescan’ filter does NOT stop newly created LUNs being automatically scanned for – it simply stops each host automatically scanning when newly created VMFS Datastores are added on another host. This is useful when you’re adding a large number of VMFS Datastores in one go (200 via PowerCLI for example) and you want to complete the addition before rescanning all hosts in a cluster (otherwise each host could perform 200 rescans). See p50 of the FC SAN Configuration Guide.
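An absent key means the filter is on, so a filter is effectively TRUE unless a key says FALSE. Below is a minimal Python sketch of that rule. It assumes you’ve exported the vCenter advanced settings as a key/value dictionary; the export itself isn’t shown.

```python
DEFAULT_FILTERS = {
    "config.vpxd.filter.hostrescanFilter": True,
    "config.vpxd.filter.rdmFilter": True,
    "config.vpxd.filter.vmfsFilter": True,
    "config.vpxd.filter.SameHostAndTransportsFilter": True,
}


def effective_filters(advanced_settings):
    """Filter states after applying vCenter advanced settings.

    Filters without a key stay enabled; keys that aren't storage filters are ignored.
    """
    state = dict(DEFAULT_FILTERS)
    for key, value in advanced_settings.items():
        if key in state:
            state[key] = str(value).strip().lower() == "true"
    return state


if __name__ == "__main__":
    # e.g. temporarily disabling the VMFS filter while troubleshooting a VMFS extend
    for key, enabled in effective_filters({"config.vpxd.filter.vmfsFilter": "FALSE"}).items():
        print(key, enabled)
```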

One occasion where a VMFS filter might be useful is extending a VMFS volume. With vSphere this is now supported but I’ve had intermittent success when expanding a LUN presented by a Netapp array. The LUN (and underlying volume) has been resized OK but when I try to extend the VMFS no valid LUNs are presented. Next time this happens I can try turning off the storage filters (VMFS in particular) and see if maybe the new space isn’t visible to all hosts that share the VMFS Datastore.

VMFS Resignaturing

LUN resignaturing is used when you present a copy of a LUN to an ESX host, typically created via a storage array snapshot. It has been around since VI3, but it has become easier to use since.

NOTE: This doesn’t apply to NFS datastores as they don’t embed a UUID in the metadata.

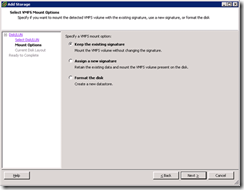

Resignaturing a LUN copy using the VI Client

- Click Add Storage on a host and select the LUN copy.

- On the next screen choose one of:

- Keep existing signature. This can only be done if the original VMFS Datastore is offline or unavailable to this host (you’re trying to mount a mirrored volume at a DR site for example).

NOTE: If you try this while the original VMFS Datastore is accessible, you’ll get an error stating that the host configuration change was not possible, and the new datastore won’t be mounted.

- Assign a new signature (data is retained). This is persistent and irreversible.

- Format the disk. This assigns a new signature but any existing data IS LOST.


Resignaturing a LUN copy using the command line (use vicfg-volume from RCLI or vMA)

- ‘esxcfg-volume -l’ to see a list of copied volumes

- Choose one of:

- ‘esxcfg-volume -r <previous VMFS label | UUID>’ to resignature the volume

- ‘esxcfg-volume -M <previous VMFS label | UUID>’ to mount the volume without resignaturing (use lower case -m for a temporary mount rather than a persistent one).
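The same rule applies in the GUI and at the command line: you can only keep the existing signature when the original datastore isn’t visible to the host. The Python sketch below picks the matching esxcfg-volume flag and refuses the combination the host would reject. It only builds the command string, and the datastore labels in the example are hypothetical.

```python
import shlex

ACTIONS = {
    "resignature": "-r",      # new signature; persistent and irreversible
    "mount": "-M",            # keep existing signature, persistent mount
    "mount-temporary": "-m",  # keep existing signature, non-persistent mount
}


def resignature_command(action, volume, original_visible):
    """Return the esxcfg-volume command for a copied VMFS volume (label or UUID)."""
    if action not in ACTIONS:
        raise ValueError(f"unknown action: {action}")
    if action.startswith("mount") and original_visible:
        # Mirrors the error you'd get: the original datastore is still accessible.
        raise ValueError("original VMFS datastore is visible; resignature instead")
    return shlex.join(["esxcfg-volume", ACTIONS[action], volume])


if __name__ == "__main__":
    print("esxcfg-volume -l")  # list copied volumes first
    print(resignature_command("mount", "Prod DS", original_visible=False))
```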

Full details for these operations can be followed in VMwareKB1011387

LUN Masking

This can be done in various ways (Netapp implement LUN Masking through the use of Initiator Groups) but for the VCAP-DCA they’re referring to PSA rules (ie at the VMkernel). For a good overview of both why you might want to do this at the hypervisor layer (and how) see this blogpost at Punching Clouds.

You can mask:

- Complete storage array

- One or more LUNs

- Paths to a LUN

To mask a particular LUN (for example):

- Get a list of existing claim rules (so you can find a free ID for your new rule):

esxcli corestorage claimrule list

NOTE: You can skip this step if you use -u to autoassign a new ID.

- Create a new rule to mask the LUN:

esxcli corestorage claimrule add --rule <number> -t location -A <hba_adapter> -C <channel> -T <target> -L <lun> -P MASK_PATH

For example:

esxcli corestorage claimrule add --rule 120 -t location -A vmhba1 -C 0 -T 0 -L 20 -P MASK_PATH

- Load this new rule to make the MASK_PATH module the owner:

esxcli corestorage claimrule load

- Unclaim existing paths to the masked LUN. Unclaiming disassociates the paths from a PSA plugin. These paths are currently owned by NMP, so you need to dissociate them from NMP and have them associated with MASK_PATH:

esxcli corestorage claiming reclaim -d 600c0ff000d5c3835a72904c01000000

NOTE: The device ID above is the ‘naa’ code for the LUN. Find it using esxcfg-scsidevs --vmfs.

- Run the new rules:

esxcli corestorage claimrule run

- Verify that the LUN/datastore is no longer visible to the host.

esxcfg-scsidevs --vmfs
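This sequence is easy to mistype, so it can help to generate it rather than type it. The Python sketch below builds the steps above for one LUN. It assumes user-defined claim rule IDs fall in the 101-65435 range described in the vSphere storage documentation; check this against your version. The existing rule IDs in the example are illustrative.

```python
import shlex

FIRST_USER_RULE = 101   # assumption: lower IDs are reserved by VMware
LAST_USER_RULE = 65435  # assumption: higher IDs are reserved by VMware


def next_free_rule(existing):
    """Lowest user rule ID not already in the claim rule list."""
    used = set(existing)
    for rule in range(FIRST_USER_RULE, LAST_USER_RULE + 1):
        if rule not in used:
            return rule
    raise ValueError("no free claim rule IDs")


def mask_lun_commands(adapter, channel, target, lun, device_id, rule):
    """Commands (as strings, not executed) to mask one LUN path via MASK_PATH."""
    add = ["esxcli", "corestorage", "claimrule", "add", "--rule", str(rule),
           "-t", "location", "-A", adapter, "-C", str(channel),
           "-T", str(target), "-L", str(lun), "-P", "MASK_PATH"]
    return [
        shlex.join(add),
        "esxcli corestorage claimrule load",
        shlex.join(["esxcli", "corestorage", "claiming", "reclaim", "-d", device_id]),
        "esxcli corestorage claimrule run",
        "esxcfg-scsidevs --vmfs",  # verify the LUN/datastore has gone
    ]


if __name__ == "__main__":
    rule = next_free_rule([0, 1, 2, 3, 4, 101, 65535])  # illustrative existing IDs
    for cmd in mask_lun_commands("vmhba1", 0, 0, 20, "600c0ff000d5c3835a72904c01000000", rule):
        print(cmd)
```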

This is a pretty convoluted procedure which I hope I don’t have to remember in the exam! VMwareKB1009449 describes this process in detail and it’s also documented on p82 of the FC SAN Configuration Guide and p96 of the Command Line Interface Installation and Reference Guide (both of which should be available during the exam).

To determine if you have any masked LUNs:

- List the visible paths on the ESX host and look for any entries containing MASK_PATH:

esxcfg-mpath -L | grep -i mask

Obviously if you want to mask a LUN from all the hosts in a cluster you’d have to run these commands on every host. William Lam’s done a useful post about automating esxcli. There’s also an online reference to the esxcli command line.

NOTE: It’s recommended that you follow the procedure above EVERY TIME you remove a LUN from a host! So if you have 16 hosts in a cluster and you want to delete one empty LUN, you have to:

- Mask the LUN at the VMkernel layer on each of the hosts

- Unpresent the LUN on your storage array

- Clear down the masking rules you created in step 1 (again for every host)
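The ordering is the important part. Every host masks the LUN before the array unpresents it, and the cleardown only happens afterwards. Below is a hypothetical Python sketch of that plan; the per-host masking commands are passed in and the host names are made up. The cleardown commands (delete the rule, then reload) are my assumption of what’s required. Check them against KB1015084, which also covers the rescans not shown here.

```python
def remove_lun_plan(hosts, rule, mask_commands):
    """Ordered (phase, host, command) steps for removing one LUN from a cluster."""
    # Phase 1: mask on every host before touching the array.
    plan = [("mask", host, cmd) for host in hosts for cmd in mask_commands]
    # Phase 2: a single array-side step.
    plan.append(("unpresent", None, "unpresent the LUN on the storage array"))
    # Phase 3: clear down the masking rule on every host (assumed commands).
    for host in hosts:
        plan.append(("cleardown", host, f"esxcli corestorage claimrule delete --rule {rule}"))
        plan.append(("cleardown", host, "esxcli corestorage claimrule load"))
    return plan


if __name__ == "__main__":
    steps = remove_lun_plan(["esx01", "esx02"], 120, ["<mask commands for this host>"])
    for phase, host, cmd in steps:
        print(phase, host or "-", cmd)
```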

Seem unbelievable? Read VMwareKB1015084…

Analyse I/O workloads

There are numerous tools to analyse I/O: IOMeter, vscsiStats, Perfmon, esxtop, etc. Things to measure:

- IOPs

- Throughput

- Bandwidth

- Latency

- Workload pattern: percentage of reads vs writes, random vs sequential, I/O sizes (small vs large)

- IO adjacency (this is where vscsiStats comes in)
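To make adjacency concrete, an I/O is sequential when it starts exactly where the previous one finished. This is similar in spirit to vscsiStats’ seek-distance histogram. Below is a minimal Python sketch that summarises a captured trace. The `Io` record, the 512-byte block size and the sample trace are assumptions; in practice the data would come from a tool such as vscsiStats rather than being typed in.

```python
from dataclasses import dataclass

BLOCK_BYTES = 512  # assumption: LBAs counted in 512-byte blocks


@dataclass
class Io:
    is_read: bool
    lba: int     # starting logical block address
    blocks: int  # transfer length in blocks


def summarise(ios, window_s):
    """Summarise I/Os (in issue order) captured over window_s seconds."""
    if not ios or window_s <= 0:
        raise ValueError("need at least one I/O and a positive window")
    total_bytes = sum(io.blocks for io in ios) * BLOCK_BYTES
    reads = sum(1 for io in ios if io.is_read)
    # Seek distance: gap between where the previous I/O ended and this one starts.
    seeks = [cur.lba - (prev.lba + prev.blocks) for prev, cur in zip(ios, ios[1:])]
    sequential = sum(1 for d in seeks if d == 0)
    return {
        "iops": len(ios) / window_s,
        "throughput_bytes_per_s": total_bytes / window_s,
        "avg_io_bytes": total_bytes / len(ios),
        "read_pct": 100.0 * reads / len(ios),
        "sequential_pct": 100.0 * sequential / len(seeks) if seeks else 0.0,
        "seek_distances": seeks,
    }


if __name__ == "__main__":
    trace = [Io(True, 0, 8), Io(True, 8, 8), Io(True, 16, 8), Io(False, 1000, 16)]
    print(summarise(trace, 1.0))
```

In the sample trace, the first three reads are back-to-back (seek distance 0) and the write jumps 976 blocks ahead. That pattern would show up as mostly sequential reads with a random write.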

There’s a useful post at vSpecialist.co.uk, plus some good PowerCLI scripts to collect metrics via esxtop (courtesy of Clinton Kitson)

VMware publish plenty of resources to help people virtualise existing apps, and that includes the I/O requirements (links courtesy of Scott Lowe);

Storage best practices

How long is a piece of string?

- VMware white paper on storage best practices

- Storage Best Practices for Scaling Virtualisation Deployments (TA2509, VMworld 2009)

- Best Practices for Managing and Monitoring Storage (VM3566, VMworld ’09)

- Storage Best Practices and Performance Tuning (TA8065, VMworld 2010, subscription required at time of writing)

- Netapp and vSphere storage best practices (including filesystem alignment)

- Read Scott Lowe’s Mastering VMware vSphere 4 book, chapter 6 (where he specifically talks about storage best practices)

- Stephen Foskett’s presentation about thin provisioning

Further Reading


- Sean Crookston’s study notes
