As with all my VCAP5-DCA study notes, the blogposts only cover material new to vSphere5, so make sure you read the v4 study notes for section 1.1 first. When published, the VCAP5-DCA study guide PDF will be a complete standalone reference.

Knowledge

- Identify RAID levels

- Identify supported HBA types

- Identify virtual disk format types

Skills and Abilities

- Determine use cases for and configure VMware DirectPath I/O

- Determine requirements for and configure NPIV

- Determine appropriate RAID level for various Virtual Machine workloads

- Apply VMware storage best practices

- Understand use cases for Raw Device Mapping

- Configure vCenter Server storage filters

- Understand and apply VMFS resignaturing

- Understand and apply LUN masking using PSA-related commands

- Analyze I/O workloads to determine storage performance requirements

- Identify and tag SSD devices

- Administer hardware acceleration for VAAI

- Configure and administer profile-based storage

- Prepare storage for maintenance (mounting/un-mounting)

- Upgrade VMware storage infrastructure

Tools & learning resources

- Product Documentation

- vSphere Client / Web Client

- vSphere CLI

- vscsiStats, esxcli, vifs, vmkfstools, esxtop/resxtop

- vSphere storage profiles on VMwareTV

- Good blogpost on NPIV (though nothing’s changed since v4)

- Chapter 6 of Scott Lowe’s Mastering VMware vSphere5 book

- VMworld sessions: VSP3868 – VMware vStorage Best Practices, VSP2774 – Supercharge your VMware deployment with VAAI

- Gregg Robertson’s study notes for 1.1

With vSphere5 having been described as a ‘storage release’, there is quite a lot of new material to cover in Section 1 of the blueprint. First I’ll cover a couple of objectives which have only minor amendments from vSphere4.

Determine use cases for and configure VMware DirectPath I/O

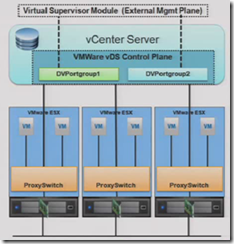

The only real change is DirectPath vMotion, which is not as grand as it sounds. As you’ll recall from vSphere4, a VM using DirectPath can’t use vMotion or snapshots (or any feature which relies on them, such as DRS and many backup products), and the device in question isn’t available to other VMs. With vSphere5 you can vMotion such a VM provided it runs on Cisco UCS with a supported Cisco UCS Virtual Machine Fabric Extender (VM-FEX) distributed switch. Read all about it here – if this is in the exam we’ve got no chance!

Identify and tag SSD devices

This is a tricky objective if you don’t own an SSD drive to experiment with (although you can work around that limitation). You can identify an SSD disk in various ways:

- Using the vSphere client. Any view which shows the storage devices (‘Datastores and Datastore clusters view’, Host summary, Host -> Configuration -> Storage etc) includes a new column ‘Drive Type’ which lists Non-SSD or SSD (for block devices) and Unknown for NFS datastores.

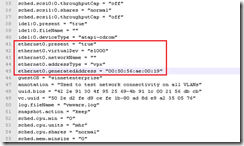

- Using the CLI. Execute the following command and look for the ‘Is SSD:’ line for your specific device:

esxcli storage core device list
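On a host with many devices the full listing is long, so a small filter can help. The following is a minimal sketch rather than an official tool: `list_ssd_flags` is a hypothetical helper name, and it assumes the usual output layout where each device identifier starts in the first column and its properties are indented beneath it.

```shell
# Hypothetical helper: reads 'esxcli storage core device list' output on stdin
# and prints one line per device in the form "<device id> <true|false>".
# Assumption: device identifiers start in column 0, properties are indented.
list_ssd_flags() {
    awk '
        /^[^[:space:]]/        { dev = $1 }        # remember the current device id
        /^[[:space:]]+Is SSD:/ { print dev, $NF }  # emit its SSD flag
    '
}

# Usage on an ESXi host (or through vCLI with your usual connection options):
#   esxcli storage core device list | list_ssd_flags
```

If you only care about one device, `esxcli storage core device list -d <device id>` limits the listing to that device.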

Tagging an SSD should be automatic but there are situations where you may need to do it manually. This can only be done via the CLI and is explained in this VMware article. The steps are similar to masking a LUN or configuring a new PSP:

- Check the existing claimrules

- Configure a new claim rule for your device, specifying the ‘enable_ssd’ option

- Enable the new claim rule and load it into memory
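The steps above can be sketched as a short shell function. Treat it as a study aid under stated assumptions rather than a definitive procedure: `tag_ssd` and the `ESXCLI` override are hypothetical names introduced here, the device identifier and SATP name are placeholders you must supply yourself, and the option is spelled `enable_ssd` as in VMware's documented procedure. The unclaim step is included because the device has to be reclaimed before the new rule applies to it.

```shell
# Hypothetical wrapper around the manual SSD tagging steps.
#   $1 = device identifier (placeholder, e.g. the naa.* or mpx.* id of your disk)
#   $2 = SATP currently claiming the device (shown by
#        'esxcli storage nmp device list -d <device id>')
# Set ESXCLI=echo to print the commands instead of running them.
tag_ssd() {
    device="$1"
    satp="$2"
    run="${ESXCLI:-esxcli}"

    # 1. Check the existing SATP claim rules
    $run storage nmp satp rule list || return 1

    # 2. Add a claim rule for the device with the enable_ssd option
    $run storage nmp satp rule add --satp "$satp" --device "$device" --option enable_ssd || return 1

    # 3. Unclaim the device, then load and run the claim rules so it is reclaimed
    $run storage core claiming unclaim --type device --device "$device" || return 1
    $run storage core claimrules load || return 1
    $run storage core claimrules run || return 1

    # 4. Verify: the 'Is SSD:' line should now read true
    $run storage core device list --device "$device"
}

# Dry run, printing the commands only:
#   ESXCLI=echo tag_ssd <device id> <SATP name>
```

Try the dry run first and compare the printed commands against the VMware article before running them on a host, as unclaiming a device that is in use will fail.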

So you’ve identified and tagged your SSD, but what can you do with it? SSDs can be used with the new Swap to Host cache feature, best summed up by Duncan over at Yellow Bricks:

“Using ‘Swap to host cache’ will severely reduce the performance impact of VMkernel swapping. It is recommended to use a local SSD drive to eliminate any network latency and to optimize for performance.”

As an interesting use case, here’s a post describing how to use Swap to Host cache with an SSD in a laptop – could be useful for a VCAP home lab!

The above and more are covered very well in chapter 15 of the vSphere5 Storage guide.