This objective is focused on the VMs rather than the hosts, but there’s still a large overlap between this objective and the previous one.

Knowledge

- Compare and contrast virtual and physical hardware resources

- Identify VMware memory management techniques

- Identify VMware CPU load balancing techniques

- Identify prerequisites for Hot Add features

Skills and Abilities

- Calculate available resources

- Properly size a Virtual Machine based on application workload

- Configure large memory pages

- Understand appropriate use cases for CPU affinity

Tools & learning resources

- Product Documentation

- vSphere Client

- Performance Charts

- vSphere CLI

- resxtop/esxtop

- VMworld 2010 session TA7750 Understanding Virtualisation Memory Management (subscription required)

- VMware’s Performance Best Practices white paper

Identify memory management techniques

The theory: read the following blogposts:

- Frank Denneman’s impact of memory reservations

- Duncan Epping’s ‘memory limits’ post (and work your way through the comments!)

The following memory mechanisms were covered in section 3.1, so I won’t duplicate them here:

- transparent page sharing

- ballooning (via VMTools)

- memory compression (vSphere 4.1 onwards)

- virtual swap files

- NUMA

There are also various mechanisms for controlling resource allocation to VMs:

- reservations and limits

- shares – disk, CPU and memory

- resource pools (in clusters)

Disable unnecessary devices in the VM settings (floppy drive, USB controllers, extra NICs etc) as they have a memory overhead.

CPU load balancing techniques

Read the white paper "VMware vSphere™: The CPU Scheduler in VMware® ESX™ 4.1" and Frank Denneman’s blogpost to understand the theory.

- NUMA architectures (see section 3.1.9 for full details). Don’t allocate a VM more vCPUs than there are physical cores in a single NUMA node.

- Hyperthreading

- Relaxed co-scheduling
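To make the NUMA rule concrete, here’s a minimal Python sketch that checks whether a VM’s vCPU count fits within one NUMA node. It assumes one NUMA node per socket (typical of Nehalem and Opteron hosts) and counts physical cores only, not hyperthreads. The `Host` class and the `fits_in_numa_node` function are hypothetical names for illustration, not part of any VMware API.

```python
from dataclasses import dataclass


@dataclass
class Host:
    sockets: int           # physical CPU packages
    cores_per_socket: int  # physical cores only; hyperthreads are not counted
    numa_nodes: int        # assumption: usually one NUMA node per socket


def cores_per_numa_node(host: Host) -> int:
    return host.sockets * host.cores_per_socket // host.numa_nodes


def fits_in_numa_node(host: Host, vcpus: int) -> bool:
    """True if all of the VM's vCPUs can be scheduled on a single NUMA node."""
    return vcpus <= cores_per_numa_node(host)


# A hypothetical two-socket, quad-core host
host = Host(sockets=2, cores_per_socket=4, numa_nodes=2)
print(fits_in_numa_node(host, vcpus=4))  # True
print(fits_in_numa_node(host, vcpus=6))  # False
```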

Use cases for CPU affinity

- licensing based on physical CPUs (although some vendors, such as Oracle, still don’t recognise CPU affinity as a way to limit licence costs)

- copy protection schemes which bind applications to a CPU (SafeEnd, FireDaemon etc)

Disadvantages

- Breaks NUMA optimisations (see Frank Denneman’s post)

- A VM with CPU affinity set cannot be migrated with vMotion, so CPU affinity can’t be configured for a VM in a fully automated DRS cluster either.

Hot Add prerequisites

- Not enabled by default.

- Guest OS support (Check Jason Boche’s blogpost for details of guest OS and hotplug)

- Memory and CPUs can be hot added (but not hot removed); not all other devices can be.

- Enabled per VM, and the VM must be powered off to change the setting (ironically!).

- Enable on templates

- Requires virtual hardware version 7

- Not compatible with Fault Tolerance: use one or the other.

Calculate available resources

There are various places to check available resources:

- At the host level
  - The Summary tab shows a high-level view of free CPU and memory (with TPS savings accounted for)
  - The Resources tab shows more in-depth information broken down per VM

- On resource pools
  - Check the reservations and limits set for the resource pool. Is it unlimited (the default) and expandable?

- At cluster level
  - Use the DRS distribution chart to understand the resource allocation

One of the most common support issues is troubleshooting why a particular VM can’t be powered on, typically with the error that it failed admission control. Check resource pool settings!

- In the VI client check the VM requirements – CPU, RAM, reservations, limits etc

- In a cluster check if admission control is enabled (Edit Settings -> HA)

- Check the parent resource pool to see if it has reservations or limits set

- Check shares for the VM. If it’s in a cluster, you can check its ‘worst case allocation’ on the Resource tab.

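NOTE: One common misconfiguration is to place VMs in the root resource pool alongside child resource pools. This can completely skew resource allocation, because each such VM is a sibling of the resource pools and competes with them, share for share, for the root resource pool’s resources.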

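NOTE: Shares are relative to the sibling VMs and resource pools under the same parent (on a standalone host with no resource pools, that’s simply the other VMs on the host), and they only apply when there’s contention. Food for thought from Duncan Epping.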
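The root resource pool problem is easier to see with numbers. The sketch below splits contended capacity between siblings purely in proportion to their shares. It deliberately ignores reservations, limits and the redistribution of entitlement that idle siblings don’t use, so treat it as an illustration only. The share values follow the vSphere defaults as I understand them: High = 8000 and Normal = 4000 CPU shares for a resource pool, and 1000 CPU shares per vCPU for a VM at Normal. The pool and VM names are made up.

```python
def allocate_by_shares(capacity_mhz: float, shares: dict[str, int]) -> dict[str, float]:
    """Split contended capacity between siblings in proportion to their shares."""
    total = sum(shares.values())
    return {name: capacity_mhz * s / total for name, s in shares.items()}


# Siblings directly under the root resource pool (hypothetical names)
siblings = {
    "Production": 8000,  # resource pool, High shares
    "Test": 4000,        # resource pool, Normal shares
    "big-vm": 4000,      # a 4-vCPU VM left in the root pool, Normal shares
}
print(allocate_by_shares(12000, siblings))
# {'Production': 6000.0, 'Test': 3000.0, 'big-vm': 3000.0}
```

A single VM left in the root pool ends up entitled to as much CPU as the entire Test pool, however many VMs that pool contains.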

NOTE: When admission control checks available memory (to guarantee to a VM as it’s being powered on) it uses ‘machine memory’ rather than ‘guest physical memory’. This means TPS savings are factored into admission control. Explained by Frank Denneman.

Make sure you remember your VCP knowledge about resource pools:

- expandable reservations – the child resource pool can also use spare resources from its parent resource pool

- fixed reservations – the child resource pool can only use its own reservation, and any attempt to reserve more than that will be denied.
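Here’s a rough sketch of how reservation-based admission control behaves with fixed and expandable reservations. It is a simplified model, not VMware’s implementation. It only tracks memory reservations in MB and ignores per-VM overhead memory and HA admission control. The `Pool` class is a hypothetical name. An expandable pool that runs short borrows just the shortfall from its parent, while a fixed pool simply refuses.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Pool:
    name: str
    reservation_mb: int               # memory this pool has reserved from its parent
    expandable: bool = False
    parent: Optional["Pool"] = None
    used_mb: int = 0                  # reservations already granted to children/VMs

    def unreserved(self) -> int:
        return self.reservation_mb - self.used_mb

    def can_reserve(self, amount_mb: int) -> bool:
        shortfall = amount_mb - self.unreserved()
        if shortfall <= 0:
            return True
        # Only an expandable pool may ask its parent for the missing amount
        if self.expandable and self.parent is not None:
            return self.parent.can_reserve(shortfall)
        return False

    def reserve(self, amount_mb: int) -> None:
        if not self.can_reserve(amount_mb):
            raise RuntimeError(f"admission control: insufficient resources in {self.name}")
        shortfall = amount_mb - self.unreserved()
        if shortfall > 0:
            # Borrow the shortfall from the parent; it becomes part of this pool's reservation
            self.parent.reserve(shortfall)
            self.reservation_mb += shortfall
        self.used_mb += amount_mb


root = Pool("cluster-root", reservation_mb=16384)
fixed = Pool("Fixed", reservation_mb=2048, expandable=False, parent=root)
expandable = Pool("Expandable", reservation_mb=2048, expandable=True, parent=root)
root.used_mb = fixed.reservation_mb + expandable.reservation_mb  # child reservations come from the root

print(fixed.can_reserve(4096))       # False: a fixed pool can't exceed its own 2048 MB
print(expandable.can_reserve(4096))  # True: borrows the missing 2048 MB from the root
```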

Some good thoughts in this VMware communities thread by Jase McCarty and another Frank Denneman post.

Properly size a VM based on application workload

Refer to section 3.1 regarding tuning memory, CPU, network and storage. Memory is often the main resource to get right. Use either vCenter or esxtop to monitor the memory statistics below:

- Active memory is key – shows an estimated amount of RAM needed by the VM

- Check ballooned or swapped memory
  - Ballooning is probably OK
  - Swap is almost certainly BAD!
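These checks can be written down as a simple review function. It’s only a sketch. The input figures are whatever you read for the VM from vCenter’s performance charts or esxtop, and the 25% headroom over active memory is an arbitrary assumption, not a VMware recommendation. `MemStats` and `review_memory` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class MemStats:
    configured_mb: int  # memory assigned to the VM
    active_mb: int      # estimated actively used memory
    ballooned_mb: int   # memory reclaimed by the balloon driver
    swapped_mb: int     # memory swapped out by the host


def review_memory(stats: MemStats, headroom: float = 1.25) -> list[str]:
    """Return human-readable findings; headroom is an assumed safety margin."""
    findings = []
    if stats.swapped_mb > 0:
        findings.append("host swapping detected: almost certainly bad, investigate contention")
    if stats.ballooned_mb > 0:
        findings.append("ballooning detected: host under memory pressure, probably OK")
    suggested_mb = int(stats.active_mb * headroom)
    if suggested_mb < stats.configured_mb:
        findings.append(
            f"active memory suggests ~{suggested_mb} MB may be enough "
            f"(configured: {stats.configured_mb} MB)"
        )
    return findings


for finding in review_memory(MemStats(configured_mb=8192, active_mb=2048, ballooned_mb=512, swapped_mb=0)):
    print(finding)
```

Active memory can be misleading for applications that manage their own memory (Java and Oracle, for example), so don’t shrink those VMs on this figure alone.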

NOTE: Some applications will grab as much memory as they can (or are configured to) and manage it themselves rather than leaving it to the guest OS; Java and Oracle are typical examples. Unfortunately this makes tuning the VM difficult, as neither VMware nor the guest OS knows what memory is in use (and therefore what can be paged or ballooned).

Use esxtop to check that a vSMP VM is balancing CPU load evenly across its vCPUs. If it isn’t, it may be misconfigured or simply not running multithreaded applications (in which case consider reducing its vCPU allocation). Bear in mind the guest HAL (uniprocessor vs multiprocessor) when doing this.
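A quick way to reason about "evenly balanced" is the spread between the busiest and idlest vCPU. In this sketch the input is the per-vCPU %USED values you’d read from esxtop with the VM’s group expanded. The 30-point threshold and the function names are my own assumptions, not a VMware rule.

```python
def vcpu_spread(per_vcpu_used: list[float]) -> float:
    """Difference in percentage points between the busiest and idlest vCPU."""
    return max(per_vcpu_used) - min(per_vcpu_used)


def review_vsmp(per_vcpu_used: list[float], threshold: float = 30.0) -> str:
    spread = vcpu_spread(per_vcpu_used)
    if spread > threshold:
        return f"unbalanced ({spread:.0f} points): check HAL/app threading, consider fewer vCPUs"
    return f"balanced ({spread:.0f} points)"


print(review_vsmp([85.0, 10.0, 8.0, 7.0]))    # one busy vCPU, three mostly idle
print(review_vsmp([40.0, 38.0, 42.0, 41.0]))  # load spread evenly
```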

Configuring large memory pages

From the VMware Performance Best Practices whitepaper:

“In addition to the usual 4KB memory pages, ESX also makes 2MB memory pages available (commonly referred to as “large pages”). By default ESX assigns these 2MB machine memory pages to guest operating systems that request them, giving the guest operating system the full advantage of using large pages.”

VMware recommend that when hardware memory virtualisation is enabled, you also enable large page support in the guest OS (large pages are already enabled by default in ESX). Some estimates show a 10-20% performance increase from large memory pages; there’s more in the VMware white paper on large page performance.
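Large pages help mostly for arithmetic reasons. Every page needs its own translation, so fewer, bigger pages mean fewer TLB entries and fewer page-table walks, and those walks are especially expensive with hardware-assisted memory virtualisation. This sketch just counts the translations needed for a 4GB region and how much memory a TLB can cover. The 64-entry TLB is a hypothetical figure (real sizes vary by CPU), and none of this predicts the 10-20% figure quoted above.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB

SMALL_PAGE = 4 * KIB
LARGE_PAGE = 2 * MIB


def pages_needed(region_bytes: int, page_bytes: int) -> int:
    """Number of page mappings needed to cover a region (ceiling division)."""
    return -(-region_bytes // page_bytes)


def tlb_reach(entries: int, page_bytes: int) -> int:
    """Bytes of memory a TLB with this many entries can map at once."""
    return entries * page_bytes


TLB_ENTRIES = 64  # hypothetical TLB size, for illustration only

for label, page in (("4KB", SMALL_PAGE), ("2MB", LARGE_PAGE)):
    print(label, pages_needed(4 * GIB, page), "pages,",
          tlb_reach(TLB_ENTRIES, page) // KIB, "KB TLB reach")
```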

If you want to know more check this good blogpost on large memory pages by Forbes Guthrie and follow his links for some interesting discussion.

Enabling/disabling large pages in ESX

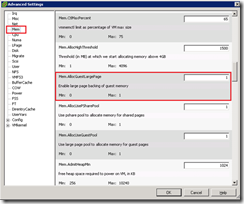

vSphere enables large pages automatically, but you can disable them if you want via Configuration -> Software -> Advanced Settings (the Mem.AllocGuestLargePage setting; this applies to all VMs on the host). After changing this setting, you’ll need to vMotion your VMs off and back onto the host for their memory to be reallocated as small pages.

NOTE: You can also override this setting per VM by adding the following line to the VM’s .vmx file (from this thread):

monitor_control.disable_mmu_largepages = FALSE

To enable large pages in the guest OS

See vendor documentation (W2k3 and RHEL4 are also covered in the VMware white paper on large page performance):

- Windows 2003 – grant the ‘Lock pages in memory’ user right in the Local Security Policy snap-in. Assign it to the appropriate user (for example the SQL Server service account if you’re using large pages with SQL Server)

- Linux – ‘echo 1024 > /proc/sys/vm/nr_hugepages’
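The value you echo into nr_hugepages is a page count, not a size, so it’s worth working out what you’re actually reserving. With the usual 2MB huge page size on x86, 1024 pages is 2GB. Below is a minimal sketch; the function names are hypothetical. On a real guest you’d read the page size from /proc/meminfo instead of the sample text used here.

```python
def hugepage_size_kb(meminfo_text: str) -> int:
    """Extract the 'Hugepagesize' value (in kB) from /proc/meminfo contents."""
    for line in meminfo_text.splitlines():
        if line.startswith("Hugepagesize:"):
            return int(line.split()[1])
    raise ValueError("Hugepagesize not found in meminfo")


def nr_hugepages_for(target_mb: int, hugepage_kb: int) -> int:
    """Huge pages needed to cover target_mb, rounded up."""
    return -(-(target_mb * 1024) // hugepage_kb)


# Sample text in /proc/meminfo format; on a Linux guest use open("/proc/meminfo").read()
sample = "MemTotal:        8167848 kB\nHugepagesize:       2048 kB\n"
size_kb = hugepage_size_kb(sample)
print(size_kb, nr_hugepages_for(2048, size_kb))  # 2048 1024
```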

Impact on TPS

TPS doesn’t work with large memory pages, so on Nehalem servers (or any server with hardware-assisted memory virtualisation) the memory savings from TPS are minimal until there is memory contention. When memory is overcommitted, ESX can break a large page down into small pages so TPS benefits can still be realised (VMware KB 1021095).
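To see why large pages defeat TPS, here’s a toy model of content-based page sharing. It splits memory into pages and counts how many distinct pages are left once identical ones are collapsed. This is a simplification: ESX scans pages in the background, hashes them, confirms matches with a full comparison and shares them copy-on-write. Here a Python set of page contents stands in for that hash-and-compare step. The 4MB of memory, with one small unique write in each 2MB region, is made-up data.

```python
KIB = 1024
MIB = 1024 * KIB


def pages_after_sharing(memory: bytes, page_size: int) -> tuple[int, int]:
    """Return (total pages, distinct pages) when identical pages are shared."""
    distinct = set()  # set membership hashes each page, then compares full contents
    total = 0
    for offset in range(0, len(memory), page_size):
        distinct.add(memory[offset:offset + page_size])
        total += 1
    return total, len(distinct)


# 4MB of mostly zeroed memory, with a few unique bytes at the start of each 2MB region
buf = bytearray(4 * MIB)
buf[0:4] = b"AAAA"
buf[2 * MIB:2 * MIB + 4] = b"BBBB"
mem = bytes(buf)  # bytes slices are hashable, bytearray slices are not

print("4KB pages:", pages_after_sharing(mem, 4 * KIB))  # (1024, 3)
print("2MB pages:", pages_after_sharing(mem, 2 * MIB))  # (2, 2)
```

With 4KB pages, the 1,022 zero pages collapse into one, so 1,024 guest pages need only 3 machine pages. With 2MB pages, a few differing bytes make each page unique and nothing is shared.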

NOTE: The impact on TPS only comes into play if you’ve enabled large pages in BOTH ESX and the guest OS. If it’s only enabled in ESX but the guest OS doesn’t request large pages, small pages will still be used and TPS will kick in as usual.

Application support for large pages

Consider what applications are running and whether they benefit from large pages – otherwise it’s all a waste of time!
